Unveiling MLOps: The Backbone of Scalable AI

Welcome to TechStation, SDG's hub for innovation in data and analytics! This article dives into MLOps—the critical discipline behind deploying, monitoring, and maintaining machine learning models—exploring its principles, practices, and role in unlocking AI’s full potential.

Interested in something else? Check all of our content here.

In today's data-driven world, organizations are increasingly relying on machine learning to gain a competitive edge.

However, getting a model from a research environment into production is a complex process fraught with challenges.

Today we see the proliferation of AI models such as ChatGPT, Gemini, PaLM.

Behind these models is a large engineering investment by hyperscalers including well-structured and governed MLOps pipelines.

What is ML? Machine learning (ML) is a branch of artificial intelligence (AI) and computer science that focuses on using data and algorithms to enable AI to imitate the way that humans learn, gradually improving its accuracy.

Machine Learning Operations (MLOps) is the discipline of deploying and maintaining machine learning models in production reliably and efficiently.

It bridges the gap between data scientists who create models and IT operations teams responsible for deploying and managing them.

MLOps provide a structured approach to the entire machine learning lifecycle, from data ingestion to model deployment and monitoring.

By automating and standardizing processes, MLOps enables organizations to deploy models faster, improve model performance, and reduce operational costs.

Ultimately, MLOps is essential for realizing the full potential of machine learning and driving business value.

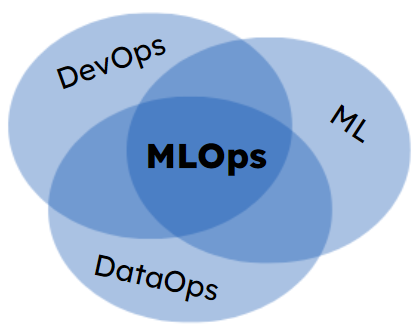

What is the difference between DataOps, DevOps and MLOps?

MLOps historically has originated from DevOps principles that are applied to software development and have been reapplied to the development of Data Models.

To understand MLOps in detail, let us look a little at the concepts of DevOps and DataOps.

DevOps is a set of practices that align software development with IT operations to efficiently create and deliver applications.

It fosters collaboration between teams to eliminate roadblocks and improve software deployment and monitoring.

DataOps is a set of practices, processes, and technologies that combine an integrated and process-oriented perspective on data with automation to improve quality, speed, and collaboration.

DataOps promotes a culture of continuous improvement in the area of data analytics and incorporates continuous monitoring and validation processes to detect and correct data quality issues, enabling teams to measure and track data accuracy, completeness, consistency, and timeliness.

MLOps is an extension of DevOps and DataOps tailored for machine learning processes.

It streamlines ML workflows from data processing to model deployment and maintenance.

By integrating DevOps principles with ML-specific requirements, MLOps accelerates deployment, improves model performance, and fosters collaboration across teams without losing focus on data.

| Methodology |

Focus |

Goal |

Key Practices |

| DevOps |

Software development and IT operations |

Shorten software development lifecycle, deliver features frequently |

Continuous integration, continuous delivery, continuous deployment, infrastructure as code, automation |

| DataOps |

Data management and analytics |

Improve data quality, consistency, and accessibility |

Data ingestion, transformation, quality, governance, orchestration |

| MLOps |

Machine learning model lifecycle |

Streamline ML model development and deployment |

Model training, deployment, monitoring, retraining, experiment tracking |

Why use MLOps?

Just as software becomes non-maintainable and can proliferate with bugs if not properly controlled through automated procedures (Devops), several problems can also occur during the development of ML models if ML pipelines (MLOps) are not used.

Let us take the example of a team of data scientists independently developing a model without release guidelines.

The release will prove to be inefficient and the deployment procedures may soon no longer be maintainable.

Without automated data control, the team above, which develops the models and perhaps does not have a deep view of the data, may soon incur violations of data protection laws and expose the company and security risks.

Moreover, when it comes to models, they need to be retrained.

Without a cyclic and adequate retraining process, models will be less effective in predicting new data with a resulting loss of performance.

Data scientist teams often have no idea of the architecture on which models are to run as they often run their developments on a sandbox environment.

If the code integration is not continuous and the code is not tested on the production environment, this can generate scalability issues and also generate unexpected costs.

Last but not least, models need monitoring, both infrastructurally and in terms of performance.

If a model is degrading its performance, I need to understand why through continuous monitoring and be able to intervene at all levels.

What are the principles of MLOps?

MLOps is therefore a set of processes that helps prevent the bad scenarios just mentioned above.

Let's see in detail how and what are the pillars that make up the MLOps processes.

Data Management

While data is not part of the release pipeline for DevOps processes, for the release of models, data is crucial and there can be no MLOps pipeline that does not have control over data.

Data management is a critical function that ensures data is collected, stored, processed, and used effectively to support business operations and decision-making.

For these reasons, the DataOps pipelines help MLOps, or rather, are an integral part of it.

The goal of DataOps is to deliver value faster by creating predictable delivery and change management of data, data models and related artifacts.

DataOps uses technology to automate the design, deployment and management of data delivery with appropriate levels of governance, and it uses metadata to improve the usability and value of data in a dynamic environment.

The core aspect here is that Data Management cannot be replicated both in ML pipelines and on Data Platform pipelines, it must be a shared asset.

This guarantees less development effort, more coherency, more data quality and more manutenability.

Automation

Automating the end-to-end ML lifecycle enhances efficiency and reliability.

Key components include:

- Data Ingestion: automating the process of collecting and integrating data from various sources

- Preprocessing: streamlining data cleaning and transformation tasks to ensure data is ready for modeling

- Model Training: using automation to manage the training process, including hyperparameter tuning and model selection

- Deployment: automating the deployment of ML models into production environments, ensuring they are readily available for use

- Monitoring and Maintenance: continuously tracking model performance and automating maintenance tasks to keep models up-to-date and functioning correctly.

Continuous X

Expanding and translating the CD/CI concept to ML projects leading to specific advantages:

- Continuous Integration: ensuring seamless and automated integration of code changes into a shared repository, promoting consistency and reliability in ML model development

- Continuous Delivery: ensuring that every change to the model or data can be automatically prepared for production, enhancing agility and responsiveness

- Validation: automatically validating models to confirm they meet specified performance criteria before deployment

- Deployment: automated deployment to ensure models are efficiently moved to production environments with proper resource management (depending on the model usage - real-time or batch/long running).

Monitoring and Observability

Establishing robust monitoring and observability practices for ML models in production is essential for maintaining performance and reliability:

- Data Quality Monitoring: ensuring the data fed into the models remains consistent and of high quality

- Real-Time Monitoring: continuously tracking the performance of ML models to detect deviations from expected behavior

- Performance Metrics: monitoring various performance metrics such as accuracy, precision, recall, and latency to detect issues promptly

- Alerting and Reporting: setting up alerts for when performance metrics fall below thresholds and generating reports to provide insights into model behaviour and health.

Governance and Compliance

Implementing governance and compliance measures ensures the ethical and responsible use of ML models.

This includes:

- Data Privacy: ensuring that data used for training and inference complies with privacy regulations and is handled securely

- Security: protecting ML models and data from unauthorized access and potential breaches

- Fairness: ensuring that ML models do not perpetuate or amplify biases, promoting fairness and equity in their predictions

- Regulatory Compliance: adhering to industry-specific regulations and standards, ensuring that ML models are legally compliant and ethically sound.

MLOps with hyperscalers

Hyperscalers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) have recognized the importance of MLOps and offer a comprehensive suite of tools and services to streamline the machine learning lifecycle.

These platforms provide managed infrastructure, pre-built components, and automation capabilities, enabling organizations to focus on model development and optimization rather than operational overhead.

By leveraging the power of hyperscalers, businesses can accelerate their ML initiatives, improve model performance, and gain a competitive edge.

This exploration will delve into the key MLOps capabilities offered by these leading cloud providers, highlighting their unique strengths and how they can be applied to various industry use cases.

Hyperscalers provide all the tools, mostly fully managed, that enable companies to develop their MLOps pipelines in a short time and without prohibitive financial investments through pay-per-use instruments.

Conclusions

In today's market, the focus is often on doing everything right away without structuring the release processes.

In a world where data changes rapidly, it is unthinkable to release models without an MLOps pipeline.

Would you use an electrical system if you knew it had never been tested?

Why would you use a model that has not been properly tested?

Often the cost of developing an MLOps pipeline seems like a waste, but if you look at it as a long term investment strategy, it is certainly worth it for the stability and control it can provide when implemented correctly.

In our experience, if you want to implement a professional solution, you cannot ignore this investment, and you should definitely go for well-structured MLOps pipelines if you want to maintain a reliable and stable model over time.

Discover how a well-structured MLOps strategy can accelerate your business efficiency and improve the performance of your machine learning models. Book a meeting with our experts to discuss how we can optimize your model lifecycle and take your business to the next level.

.png?width=2000&name=SDG%20-%20Logo%20White%20(1).png)