Streamline workflows with Snowflake's API capabilities.

Welcome to TechStation, SDG's space for exploring the latest trends and innovations in data and analytics! In this article, we'll dive into Snowflake’s Python UDFs to see how they enable seamless REST API calls, enhancing data integration and simplifying complex operations directly within the platform.

Interested in something else? Check all of our content here.

Snowflake has been continuously expanding its capabilities, evolving into a comprehensive platform that redefines what developers can achieve.

Each feature pushes the boundaries of data management, enabling us to reinterpret and redesign our workflows.

In today's data-driven world, being able to simplify complex operations by centralizing them within a single platform is invaluable in order to be able to.

This reduces the reliance on external tools and minimizes the risk of failure-prone interactions.

In this article, we will explore a common use case that includes several of Snowflake's newly-released features.

Specifically, we will delve into Snowflake’s Python UDFs to trigger REST API calls directly from within the platform.

This approach not only simplifies workflows but also allows for real-time data integration, offering a robust solution for modern enterprises.

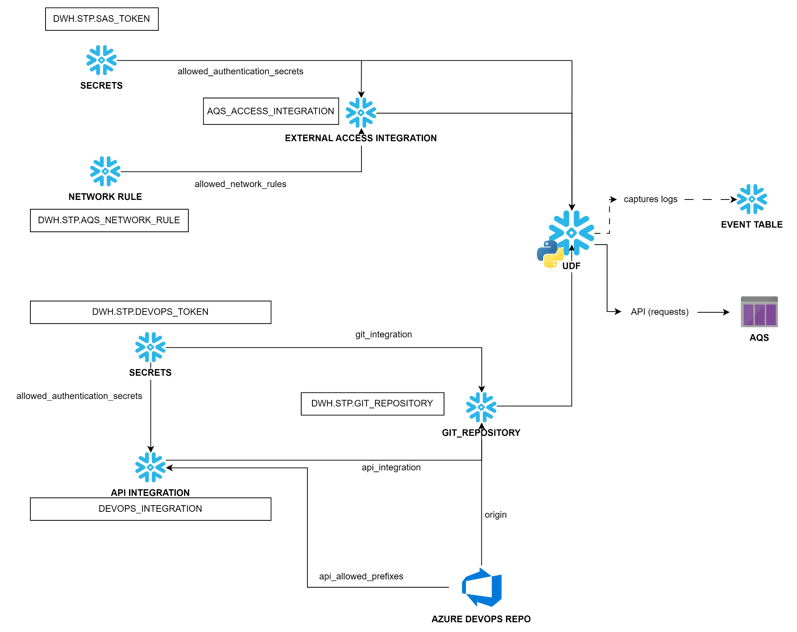

Project architecture overview

Target set-up

As sensitive data is central to our applications, ensuring its secure storage and management is fundamental.

Recognizing this need, Snowflake has introduced Secrets, a schema-level object designed to safeguard sensitive information with robust security measures.

For more details, you can refer to the official Snowflake documentation.

In our implementation, we will leverage Snowflake Secrets to securely store and manage the credentials necessary for authenticating with external services.

Specifically, we will demonstrate how to use these secrets to securely interact with Azure DevOps and Azure Queue Services.

CREATE SECRET DWH.STP.SAS_TOKEN

TYPE = GENERIC_STRING

SECRET_STRING = '<sas_token>'

COMMENT = 'expires on 2025-06-21'

;

To establish secure communication with external services, we need to create a Network Rule within Snowflake, which requires us to operate as the schema owner.

This rule will specify that traffic is directed toward a particular external destination, defined by the HOST_PORT for egress.

For detailed guidance, you can refer to the official Snowflake documentation.

CREATE OR REPLACE NETWORK RULE DWH.STP.AQS_NETWORK_RULE

MODE = EGRESS

TYPE = HOST_PORT

VALUE_LIST = ('<storage_account>.queue.core.windows.net')

;

It's important to note that the Network Rule object in Snowflake itself does not determine whether the network traffic is permitted or prohibited.

Instead, we must explicitly define its nature within the rule that utilizes it, in our scenario through an External Access Integration.

Within this integration, we use the ALLOWED_NETWORK_RULES parameter to specify which systems we intend to reach and the ALLOWED_AUTHENTICATION_SECRETS parameter to define which secrets can be accessed within the User-Defined Function (UDF).

For a comprehensive overview, refer to the Snowflake External Network Access documentation.

CREATE OR REPLACE NETWORK RULE DWH.STP.AQS_NETWORK_RULE

MODE = EGRESS

TYPE = HOST_PORT

VALUE_LIST = ('<storage_account>.queue.core.windows.net')

;

grant usage on integration DEV_ACCESS_INTEGRATION to role UDF_ROLE;

By setting up Network Rules and utilizing Snowflake Secrets, we ensure that our applications can securely and efficiently communicate with Azure Queue Services.

With these elements in place, we can move forward, shifting our focus to Git Integration, exploring how to incorporate version control into our workflow to enhance collaboration and simplify the development process.

Git versioning

The core of our implementation is Python-based, and to ensure our code is not confined solely to Snowflake, we can leverage Git Integration.

This feature provides a way to interact between Snowflake and our version control system (Azure DevOps), allowing developers to work locally and perform all required tests before pushing each feature.

For more information on this process, refer to the Snowflake Git Integration documentation.

In a manner similar to the set up of the External Access Integration, we need to securely store our Personal Access Token (PAT) in a secret.

This secret will then be referenced within another type of integration—the API Integration—required to establish a secure link to our repository.

CREATE OR REPLACE SECRET DWH.STP.DEVOPS_TOKEN

TYPE = password

username = '<username>'

password = '<pwd>'

comment = 'expires on 2025-06-21'

;

CREATE OR REPLACE API INTEGRATION DEVOPS_INTEGRATION

API_PROVIDER = git_https_api

API_ALLOWED_PREFIXES = ('https://dev.azure.com/<organization>)

ALLOWED_AUTHENTICATION_SECRETS = (DWH.STP.DEVOPS_TOKEN)

ENABLED = TRUE

;

CREATE OR REPLACE GIT REPOSITORY DWH.STP.GIT_REPOSITORY

API_INTEGRATION = DEVOPS_INTEGRATION

GIT_CREDENTIALS = DWH.STP.DEVOPS_TOKEN

ORIGIN = 'https://dev.azure.com/<organization>/<project>/_git/<repository>

;

Once all required pieces of information have been defined, we can proceed with the definition of our function.

create or replace function DWH.STP.CALL_AQS(perimeter STRING)

returns string

language python

runtime_versione = 3.9

handler = 'aqs_trigger.main'

external_access_integrations = (AQS_ACCESS_INTEGRATION)

packages = ('requests','pyyaml')

imports = ('@DWH.STP.GIT_REPOSITORY/branches/main/app/utils.py')

'@DWH.STP.GIT_REPOSITORY/branches/main/app/utils.py')

secrets = ('aqs_sas_token' = DWH.STP.SAS_TOKEN )

;

As we can see, the definition includes several elements:

- The External Access Integration we have created to specify the outgoing network traffic

- The packages that are required for the code to run (to have an overview of all the possibilities: select * from information_schema.packages)

- The files to be imported from the Git Integration

- The secrets, permitted within the Access Integration, which can be accessed within the main handler

As a final step, in order to be able to debug our function by reading the logs we include by means of the logging package in Python, we want to set up an event table and define it at account level.

USE ROLE ACCOUNTADMIN;

CREATE EVENT TABLE DWH.STP.EVENT_LOGGER;

ALTER ACCOUNT SET EVENT_TABLE = DWH.STP.EVENT_LOGGER;

ALTER FUNCTION DWH.STP.CALL_AQS(STRING) SET LOG_LEVEL = INFO;

Conclusion

In this article, we have demonstrated how to leverage Snowflake’s capabilities to initiate REST API calls directly from the platform.

By meticulously configuring secrets, network rules, external access integrations, and event tables, we've implemented a streamlined solution that eliminates the need for additional tools.

Centralizing these operations within Snowflake guarantees that organizations can enhance their levels of efficiency and security.

This approach can be extended to a wide range of use cases where interaction between the platform and external systems is needed, reducing the time between data extraction and its availability for use and allowing DataOps teams to guarantee high levels of security and data quality.

.png?width=2000&name=SDG%20-%20Logo%20White%20(1).png)